Charles Deledalle - Publications

Publications

Some of the publications below have appeared in IEEE, Springer or Elsevier journals or conference records. By allowing you to download them, I am required to post the following copyright reminder: "This material is presented to ensure timely dissemination of scholarly and technical work. Copyright and all rights therein are retained by authors or by other copyright holders. All persons copying this information are expected to adhere to the terms and constraints invoked by each author's copyright. In most cases, these works may not be reposted without the explicit permission of the copyright holder."

[See my publications on Google Scholar]

Papers in refereed journals (33)

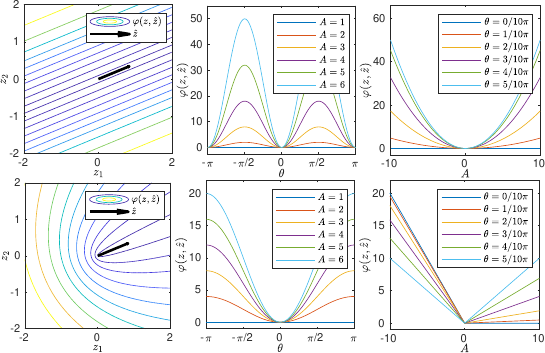

Charles-Alban Deledalle, Loïc Denis, Florence Tupin. Journal of Mathematical Imaging and Vision, vol. 64, pp. 298-320, 2022 (Springer link, HAL). Synthetic aperture radar (SAR) images are widely used for Earth observation to complement optical imaging. By combining information on the polarization and the phase shift of the radar echoes, SAR images offer high sensitivity to the geometry and materials that compose a scene. This wealth of information comes with a drawback inherent to all coherent imaging modalities: a strong signal-dependent noise called "speckle". This paper addresses the mathematical issues of performing speckle reduction in a transformed domain: the matrix-log domain. Rather than directly estimating noiseless covariance matrices, recasting the denoising problem in terms of the matrix-log of the covariance matrices stabilizes noise fluctuations and makes it possible to apply off-the-shelf denoising algorithms. We refine the method MuLoG by replacing heuristic procedures with exact expressions and improving the estimation strategy. This corrects a bias of the original method and should facilitate and encourage the adaptation of general-purpose processing methods to SAR imaging.
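The matrix-log idea above can be sketched in a few lines of NumPy. This is a naive illustration under our own assumptions, not MuLoG itself: each per-pixel covariance is mapped to its matrix logarithm through an eigendecomposition, the real and imaginary parts of every entry of the log-matrices are passed to an arbitrary real-valued denoiser, and the result is mapped back with the matrix exponential. The function names (`hermitian_fn`, `denoise_in_matrix_log_domain`) and the entry-wise channel splitting are hypothetical choices; MuLoG's actual estimation strategy, which the paper refines, is not reproduced here.

```python
import numpy as np

def hermitian_fn(C, f):
    """Apply a scalar function f to the eigenvalues of Hermitian matrices.

    C has shape (..., D, D); np.linalg.eigh works on stacks of matrices.
    """
    w, U = np.linalg.eigh(np.asarray(C, dtype=complex))
    # U diag(f(w)) U^H, computed by scaling the columns of U
    return (U * f(w)[..., None, :]) @ np.conj(np.swapaxes(U, -1, -2))

def denoise_in_matrix_log_domain(covs, denoiser):
    """Naive log-domain despeckling sketch (hypothetical, not the full MuLoG algorithm).

    covs: array (H, W, D, D) of Hermitian positive-definite covariance matrices.
    denoiser: any real-valued 2D denoiser, e.g. an off-the-shelf Gaussian one.
    """
    logs = hermitian_fn(covs, np.log)          # matrix-log of each covariance
    out = np.empty(logs.shape, dtype=complex)
    D = logs.shape[-1]
    for a in range(D):
        for b in range(D):
            channel = logs[..., a, b]
            # denoise real and imaginary parts as two separate real images
            out[..., a, b] = denoiser(channel.real) + 1j * denoiser(channel.imag)
    # re-impose Hermitian symmetry before mapping back
    out = 0.5 * (out + np.conj(np.swapaxes(out, -1, -2)))
    return hermitian_fn(out, np.exp)           # matrix exponential: back to covariances
```

Because the matrix exponential of any Hermitian matrix is positive definite, whatever the plugged-in denoiser does in the log domain, the output is a valid covariance field.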

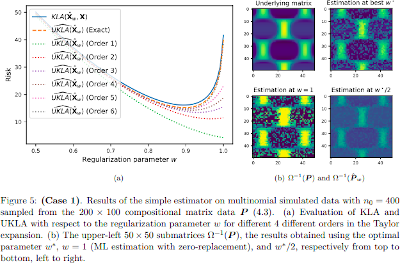

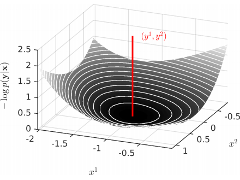

Jérémie Bigot, Charles Deledalle. Computational Statistics and Data Analysis, vol. 169, pp. 107423, 2022 (ArXiv, Elsevier). This paper is concerned with the analysis of observations organized in matrix form whose elements are count data assumed to follow a Poisson or a multinomial distribution. We focus on the estimation of either the intensity matrix (Poisson case) or the compositional matrix (multinomial case), which is assumed to have a low-rank structure. We propose to construct an estimator by minimizing the negative log-likelihood regularized by a nuclear norm penalty. Our approach easily yields a low-rank matrix-valued estimator with positive entries which belongs to the set of row-stochastic matrices in the multinomial case. Our main contribution is then a data-driven way to select the regularization parameter in the construction of such estimators by minimizing (approximately) unbiased estimates of the Kullback-Leibler (KL) risk in such models, which generalize Stein's unbiased risk estimation originally proposed for Gaussian data. The evaluation of these quantities is a delicate problem, and we introduce novel methods to obtain accurate numerical approximations of such unbiased estimates. Simulated data are used to validate this way of selecting regularization parameters for low-rank matrix estimation from count data. Examples from a survey study and metagenomics also illustrate the benefits of our approach for real data analysis.

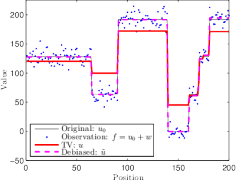

Charles-Alban Deledalle, Nicolas Papadakis, Joseph Salmon, Samuel Vaiter. Journal of Mathematical Imaging and Vision, vol. 63, pp. 216-236, 2021 (ArXiv, Springer Link). In many linear regression problems, including ill-posed inverse problems in image restoration, the data exhibit some sparse structures that can be used to regularize the inversion. To this end, a classical path is to use l12 block-based regularization. While efficient at retrieving the inherent sparsity patterns of the data (the support), the estimated solutions are known to suffer from a systematic bias. We propose a general framework for removing this artifact by refitting the solution towards the data while preserving key features of its structure such as the support. This is done through the use of refitting block penalties that only act on the support of the estimated solution. Based on an analysis of related works in the literature, we introduce a new penalty that is well suited for refitting purposes. We also present a new algorithm to obtain the refitted solution along with the original (biased) solution for any convex refitting block penalty. Experiments illustrate the good behavior of the proposed block penalty for refitting solutions of Total Variation and Total Generalized Variation models.

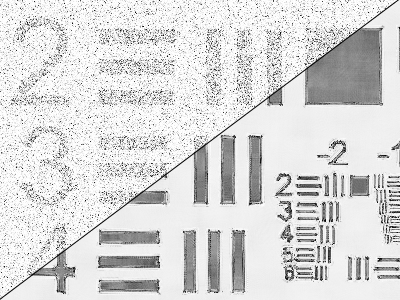

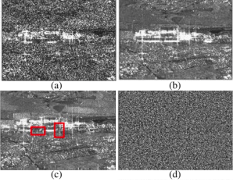

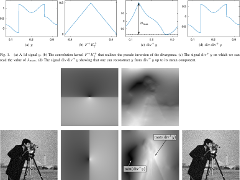

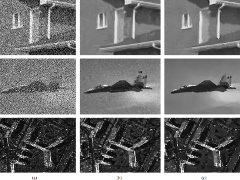

Charles-Alban Deledalle, Jérôme Gilles. IET Image Processing, vol. 14, no. 14, pp. 3422-3432, Dec. 2020 (HAL, IET Digital Library). A new blind image deconvolution technique is developed for atmospheric turbulence deblurring. The originality of the proposed approach lies in an actual physical model, known as the Fried kernel, that quantifies the impact of atmospheric turbulence on the optical resolution of images. While the original expression of the Fried kernel can seem cumbersome at first sight, we show that it can be reparameterized in a much simpler form. This simple expression allows us to efficiently embed this kernel in the proposed Blind Atmospheric TUrbulence Deconvolution (BATUD) algorithm. BATUD is an iterative algorithm that alternately performs deconvolution and estimates the Fried kernel by jointly relying on a Gaussian Mixture Model prior of natural image patches and controlling for the squared Euclidean norm of the Fried kernel. Numerical experiments show that our blind deconvolution algorithm behaves well in different simulated turbulence scenarios, as well as on real images. Not only does BATUD outperform state-of-the-art approaches used in atmospheric turbulence deconvolution in terms of image quality metrics, but it is also faster.

Michael J. Bianco, Peter Gerstoft, James Traer, Emma Ozanich, Marie A. Roch, Sharon Gannot, Charles-Alban Deledalle. Journal of the Acoustical Society of America, vol. 146, no. 5, pp. 3590-3628, 2019 (ASA, ArXiv). Acoustic data provide scientific and engineering insights in fields ranging from biology and communications to ocean and Earth science. We survey the recent advances and transformative potential of machine learning (ML), including deep learning, in the field of acoustics. ML is a broad family of statistical techniques for automatically detecting and utilizing patterns in data. Relative to conventional acoustics and signal processing, ML is data-driven. Given sufficient training data, ML can discover complex relationships between features. With large volumes of training data, ML can discover models describing complex acoustic phenomena such as human speech and reverberation. ML in acoustics is rapidly developing with compelling results and significant future promise. We first introduce ML, then highlight ML developments in five acoustics research areas: source localization in speech processing, source localization in ocean acoustics, bioacoustics, seismic exploration, and environmental sounds in everyday scenes.

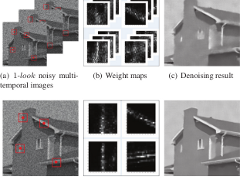

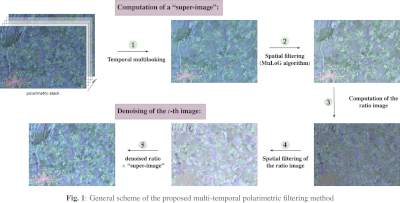

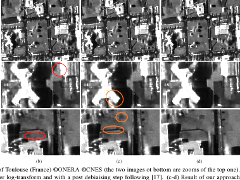

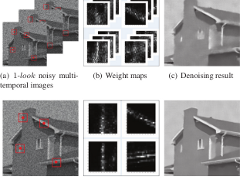

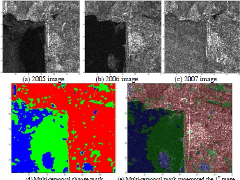

Weiying Zhao, Loïc Denis, Charles-Alban Deledalle, Henri Maitre, Jean-Marie Nicolas, Florence Tupin. IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 6, pp. 3552-3565, 2019 (HAL, IEEE Xplore). In this paper, we propose a generic multi-temporal SAR despeckling method that extends any single-image speckle reduction algorithm to multi-temporal stacks. Our method, RAtio-BAsed multi-temporal SAR despeckling (RABASAR), is based on ratios and fully exploits a "super-image" (i.e., the temporal mean) in the process. The proposed approach can be divided into three steps: 1) computing the "super-image" through temporal averaging; 2) denoising the ratio images formed by dividing the noisy images by the "super-image"; 3) computing the denoised images by multiplying the denoised ratio images by the "super-image". Thanks to the improved spatial stationarity of the ratio images, denoising them with a speckle-reduction method is more effective than denoising the original multi-temporal stack. The data volume to be processed is also reduced compared to other methods through the use of the "super-image". Comparison with several state-of-the-art reference methods shows numerically (peak signal-to-noise ratio, structural similarity index) and visually better results on both simulated and real SAR stacks. The proposed ratio-based denoising framework successfully extends single-image SAR denoising methods to exploit the temporal information of a time series.
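The three RABASAR steps listed above map directly onto array operations. The sketch below assumes a co-registered stack of strictly positive intensity images of shape (T, H, W); `mean_filter` is a hypothetical placeholder for whichever single-image speckle reduction method would be plugged in at step 2, and all names are ours rather than taken from the paper's code.

```python
import numpy as np

def mean_filter(img, r=1):
    """Hypothetical stand-in for any single-image despeckling method:
    a (2r+1) x (2r+1) moving average with reflected borders."""
    H, W = img.shape
    padded = np.pad(img, r, mode="reflect")
    out = np.zeros((H, W))
    for di in range(2 * r + 1):
        for dj in range(2 * r + 1):
            out += padded[di:di + H, dj:dj + W]
    return out / (2 * r + 1) ** 2

def ratio_based_despeckling(stack, denoise=mean_filter):
    """Three-step ratio scheme; stack has shape (T, H, W) with positive intensities."""
    super_image = stack.mean(axis=0)                    # 1) temporal mean ("super-image")
    ratios = stack / super_image                        # 2a) noisy image / super-image
    denoised = np.stack([denoise(r) for r in ratios])   # 2b) single-image denoising
    return denoised * super_image                       # 3) multiply back
```

With an identity `denoise` the scheme returns the input stack unchanged, which is a convenient sanity check before plugging in a real SAR despeckler.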

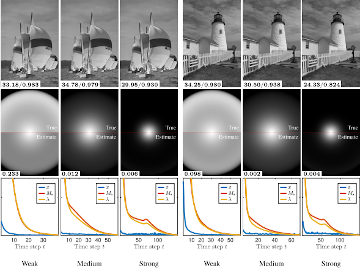

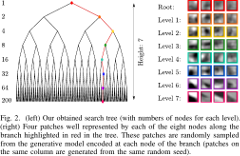

Shibin Parameswaran, Charles-Alban Deledalle, Loïc Denis, Truong Q. Nguyen. IEEE Transactions on Image Processing, vol. 28, no. 2, pp. 687-698, 2019 (IEEE Xplore, recommended pdf, HAL, ArXiv). Presented at 5G and Beyond forum, May 2018, La Jolla, CA, USA (poster). Presented at LIRMM Seminar, Jan 2019, Montpellier, France (slides). Image restoration methods aim to recover the underlying clean image from corrupted observations. The Expected Patch Log-likelihood (EPLL) algorithm is a powerful image restoration method that uses a Gaussian mixture model (GMM) prior on the patches of natural images. Although it is very effective at restoring images, its high runtime complexity makes EPLL ill-suited for most practical applications. In this paper, we propose three approximations to the original EPLL algorithm. The resulting algorithm, which we call fast-EPLL (FEPLL), attains a dramatic speed-up of two orders of magnitude over EPLL while incurring a negligible drop in restored image quality (less than 0.5 dB). We demonstrate the efficacy and versatility of our algorithm on a number of inverse problems such as denoising, deblurring, super-resolution, inpainting and devignetting. To the best of our knowledge, FEPLL is the first algorithm that can competitively restore a 512x512 pixel image in under 0.5 s for all the degradations mentioned above without specialized code optimizations such as CPU parallelization or GPU implementation.

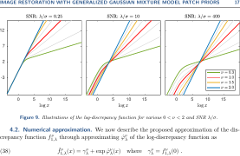

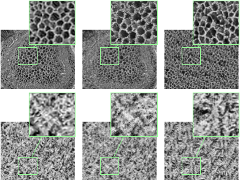

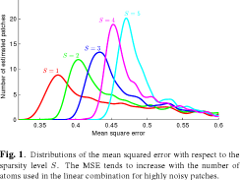

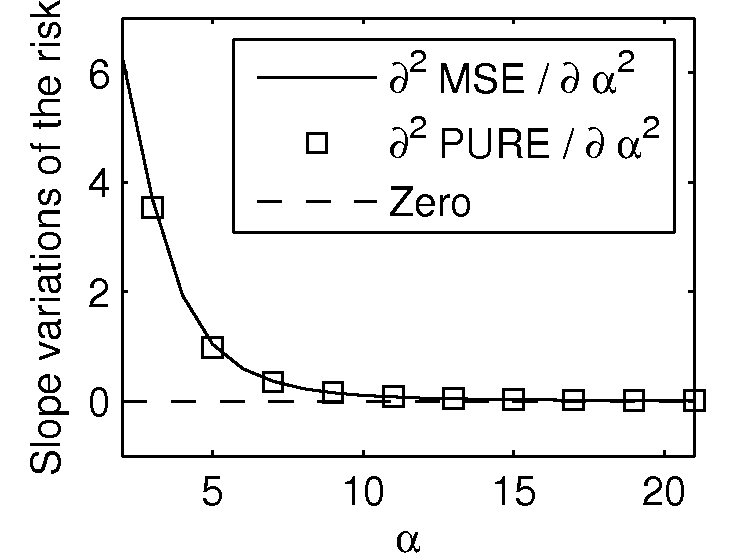

Charles-Alban Deledalle, Shibin Parameswaran, Truong Q. Nguyen. SIAM Journal on Imaging Sciences, vol. 11, no. 4, pp. 2568-2609, 2018 (epubs SIAM, HAL, ArXiv). Presented at LIRMM Seminar, Jan 2019, Montpellier, France (slides). Patch priors have become an important component of image restoration. A powerful approach in this category of restoration algorithms is the popular Expected Patch Log-likelihood (EPLL) algorithm. EPLL uses a Gaussian mixture model (GMM) prior learned on clean image patches as a way to regularize degraded patches. In this paper, we show that a generalized Gaussian mixture model (GGMM) captures the underlying distribution of patches better than a GMM. Even though GGMM is a powerful prior to combine with EPLL, the non-Gaussianity of its components makes it challenging to use within the computationally intensive process of image restoration. Specifically, each patch has to undergo a patch classification step and a shrinkage step. These two steps can be solved efficiently with a GMM prior but are computationally impractical with a GGMM prior. We provide approximations and computational recipes for fast evaluation of these two steps, so that EPLL can embed a GGMM prior on an image with tens of thousands of patches or more. Our main contribution is a thorough theoretical analysis of the accuracy of these approximations. Our evaluations indicate that the GGMM prior is consistently a better fit for modeling the distribution of image patches and performs better on average in image denoising tasks.

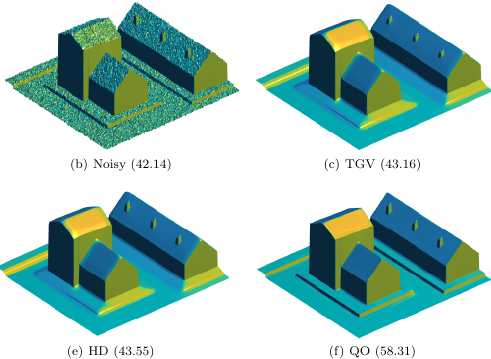

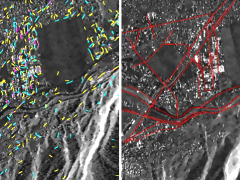

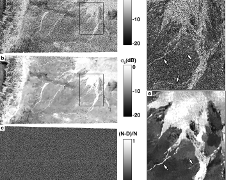

Giampaolo Ferraioli, Charles-Alban Deledalle, Loïc Denis, Florence Tupin. IEEE Transactions on Geoscience and Remote Sensing, vol. 56, no. 3, pp. 1626-1636, 2018 (IEEE Xplore, HAL). Reconstruction of elevation maps from a collection of SAR images obtained in interferometric configuration is a challenging task. Reconstruction methods must overcome two adverse effects: the strong interferometric noise that contaminates the data, and the 2π phase ambiguities. Interferometric noise requires some form of smoothing among pixels of identical height. Phase ambiguities can be solved, up to a point, by combining linkage to the neighbors with a global optimization strategy that avoids being trapped in local minima. This paper introduces a reconstruction method, PARISAR, that achieves both resolution-preserving denoising and robust phase unwrapping by combining non-local denoising methods based on patch similarities with total-variation regularization. The optimization algorithm, based on graph cuts, identifies the global optimum. We compare PARISAR with several other reconstruction methods on both numerical simulations and satellite images and show a qualitative and quantitative improvement over state-of-the-art reconstruction methods for multi-baseline SAR interferometry.

Jérémie Bigot, Charles Deledalle, Delphine Féral. Journal of Machine Learning Research, vol. 18, no. 137, pp. 1-50, 2017 (JMLR, ArXiv). Presented at ISNPS'2018, June, Salerno, Italy (slides). We consider the problem of estimating a low-rank signal matrix from noisy measurements under the assumption that the distribution of the data matrix belongs to an exponential family. In this setting, we derive generalized Stein's unbiased risk estimation (SURE) formulas that hold for any spectral estimator which shrinks or thresholds the singular values of the data matrix. This leads to new data-driven shrinkage rules, whose optimality is discussed using tools from random matrix theory and through numerical experiments. Our approach is compared to recent results on asymptotically optimal shrinkage rules for Gaussian noise. It also leads to new procedures for singular value shrinkage in matrix denoising for Poisson-distributed or Gamma-distributed measurements.
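The spectral estimators discussed above keep the singular vectors of the data matrix and only modify its singular values. The minimal NumPy sketch below shows that family with two textbook shrinkage rules (soft- and hard-thresholding); the function names are illustrative, and it does not implement the paper's SURE formulas or its data-driven rules. For reference, singular value soft-thresholding is also the proximal operator of λ times the nuclear norm.

```python
import numpy as np

def spectral_estimator(Y, shrink):
    """Apply a shrinkage rule to the singular values of Y, keeping its singular vectors."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * shrink(s)) @ Vt   # U diag(shrink(s)) V^T

def soft_threshold_rule(lam):
    """Singular value soft-thresholding: s -> max(s - lam, 0)."""
    return lambda s: np.maximum(s - lam, 0.0)

def hard_threshold_rule(lam):
    """Singular value hard-thresholding: keep s only if s > lam."""
    return lambda s: np.where(s > lam, s, 0.0)
```

Choosing `lam` is exactly the parameter-selection problem that a SURE-type risk estimate is meant to address.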

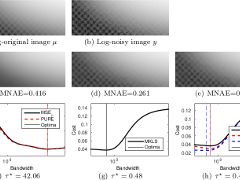

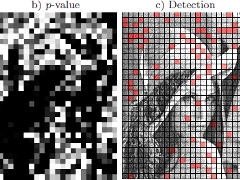

Charles-Alban Deledalle. Electronic Journal of Statistics, vol. 11, no. 2, pp. 3141-3164, 2017 (Project Euclid, HAL, ArXiv). We address the question of estimating Kullback-Leibler losses rather than squared losses in recovery problems where the noise is distributed within the exponential family. We exhibit conditions under which these losses can be estimated without bias or with a controlled bias. Simulations on parameter selection problems in image denoising applications with Gamma and Poisson noise illustrate the interest of Kullback-Leibler losses and of the proposed estimators.

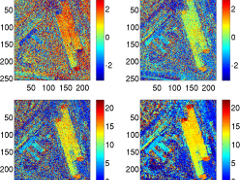

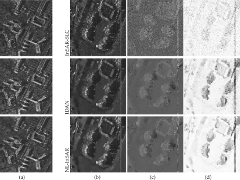

Charles-Alban Deledalle, Loïc Denis, Sonia Tabti, Florence Tupin. IEEE Transactions on Image Processing, vol. 26, no. 9, pp. 4389-4403, 2017 (IEEE Xplore, recommended pdf, HAL). Speckle reduction is a longstanding topic in synthetic aperture radar (SAR) imaging. Since most current and planned SAR imaging satellites operate in polarimetric, interferometric or tomographic modes, SAR images are multi-channel and speckle reduction techniques must jointly process all channels to recover polarimetric and interferometric information. The distinctive nature of the SAR signal (complex-valued, corrupted by multiplicative fluctuations) calls for the development of specialized methods for speckle reduction. Image denoising is a very active topic in image processing, with a wide variety of approaches and many denoising algorithms available, almost always designed for additive Gaussian noise suppression. This paper proposes a general scheme, called MuLoG (MUlti-channel LOgarithm with Gaussian denoising), to include such Gaussian denoisers within a multi-channel SAR speckle reduction technique. A new family of speckle reduction algorithms can thus be obtained, benefiting from the ongoing progress in Gaussian denoising and offering several speckle reduction results; each result often displays method-specific artifacts, which can be dismissed by comparing the results.

C.-A. Deledalle, N. Papadakis, J. Salmon and S. Vaiter. SIAM Journal on Imaging Sciences, vol. 10, no. 1, pp. 243-284, 2017 (epubs SIAM, HAL, ArXiv). In this paper, we propose a new framework to remove parts of the systematic errors affecting popular restoration algorithms, with a special focus on image processing tasks. Extending ideas that emerged for l1 regularization, we develop an approach that can help re-fit the results of standard methods towards the input data. Total variation regularizations and non-local means are special cases of interest. We identify important covariant information that should be preserved by the re-fitting method, and emphasize the importance of preserving the Jacobian (w.r.t. the observed signal) of the original estimator. Then, we provide an approach that has a "twicing" flavor and allows re-fitting the restored signal by adding back a local affine transformation of the residual term. We illustrate the benefits of our method on numerical simulations for image restoration tasks.
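In the simplest setting (denoising with an identity design), the Jacobian-based idea can be checked by hand on soft-thresholding, the estimator associated with l1 regularization. Its Jacobian is diagonal, equal to 1 on the support and 0 elsewhere, so adding back the Jacobian applied to the residual turns soft-thresholding into hard-thresholding: the support is preserved and the shrinkage bias on it disappears. This toy sketch is our own illustration of that special case, not the paper's general re-fitting algorithm; all function names are hypothetical.

```python
import numpy as np

def soft_threshold(y, lam):
    """Soft-thresholding: the l1-regularized denoiser (biased on its support)."""
    return np.sign(y) * np.maximum(np.abs(y) - lam, 0.0)

def soft_threshold_jacobian_diag(y, lam):
    """Soft-thresholding acts coordinate-wise, so its Jacobian is diagonal:
    1 where |y_i| > lam (the support), 0 elsewhere (defined almost everywhere)."""
    return (np.abs(y) > lam).astype(float)

def jacobian_refit(y, lam):
    """Add back the residual, filtered by the Jacobian of the original estimator."""
    x_hat = soft_threshold(y, lam)
    J = soft_threshold_jacobian_diag(y, lam)
    return x_hat + J * (y - x_hat)
```

On the support the re-fitted values equal the observations, and off the support they stay at zero, which is the hard-thresholding estimator.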

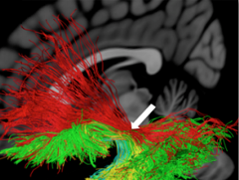

J. Hau, S. Sarubbo, J. C. Houde, F. Corsini, G. Girard, C. Deledalle, F. Crivello, L. Zago, E. Mellet, G. Jobard, M. Joliot, B. Mazoyer, N. Tzourio-Mazoyer, M. Descoteaux, L. Petit. Brain Structure and Function, vol. 222, no. 4, pp. 1645-1662, 2017 (Springer Link, HAL). Despite its significant functional and clinical interest, the anatomy of the uncinate fasciculus (UF) has received little attention. It is known as a 'hook-shaped' fascicle connecting the frontal and anterior temporal lobes and is believed to consist of multiple subcomponents. However, knowledge of its precise connectional anatomy in humans is lacking and its subcomponent divisions are unclear. In the present study, we evaluate the anatomy of the UF and provide its detailed normative description in 30 healthy subjects with advanced particle-filtering tractography with anatomical priors and robustness to crossing fibers with constrained spherical deconvolution. We extracted the UF by defining its stem encompassing all streamlines that converge into a compact bundle, which consisted not only of the classic hook-shaped fibers but also of straight, horizontally oriented ones. We applied an automatic clustering method to subdivide the UF bundle and revealed five subcomponents in each hemisphere with distinct connectivity profiles, including different asymmetries. A layer-by-layer microdissection of the ventral part of the external and extreme capsules using Klingler's preparation also demonstrated five types of uncinate fibers that, according to their pattern, depth, and cortical terminations, were consistent with the diffusion-based UF subcomponents. The present results shed new light on the UF cortical terminations and its multicomponent internal organization with extended cortical connections within the frontal and temporal cortices. The different lateralization patterns we report within the UF subcomponents reconcile the conflicting asymmetry findings of the literature. Such results clarifying the UF structural anatomy lay the groundwork for more targeted investigations of its functional role, especially in semantic language processing.

Samuel Vaiter, Charles-Alban Deledalle, Gabriel Peyré, Jalal M. Fadili, Charles Dossal. Annals of the Institute of Statistical Mathematics, vol. 69, no. 4, pp. 791-832, 2017 (Springer Link, ArXiv). In this paper, we are concerned with regularized regression problems where the prior penalty is a piecewise regular/partly smooth gauge whose active manifold is linear. This encompasses as special cases the Lasso (l1 regularizer), the group Lasso (l1-l2 regularizer) and the l-infinity-norm regularizer. This also includes so-called analysis-type priors, i.e., compositions of the previously mentioned functionals with linear operators, a typical example being the total variation prior. We study the sensitivity of any regularized minimizer to perturbations of the observations and provide its precise local parameterization. Our main result shows that, when the observations are outside a set of zero Lebesgue measure, the predictor moves locally stably along the same linear space as the observations undergo small perturbations. This local stability is a consequence of the piecewise regularity of the gauge, which in turn plays a pivotal role in obtaining a closed-form expression for the variations of the predictor w.r.t. the observations, which holds almost everywhere. When the perturbation is random (with an appropriate continuous distribution), this allows us to derive an unbiased estimator of the degrees of freedom and of the risk of the estimator prediction. Our results hold true without placing any assumption on the design matrix, whether or not it has full column rank. They generalize results already known in the literature for the Lasso problem, the general Lasso problem (analysis l1-penalty), or the group Lasso, where existing results for the latter assume that the design has full column rank.

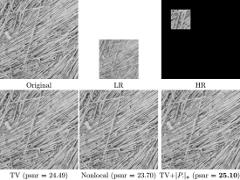

M. El Gheche, J.-F. Aujol, Y. Berthoumieu, C.-A. Deledalle. IEEE Transactions on Image Processing, vol. 26, no. 2, pp. 549-560, 2017 (IEEE Xplore, HAL). In this paper, we aim at super-resolving a low-resolution texture under the assumption that a high-resolution patch of the texture is available. To do so, we propose a variational method that combines two approaches, namely texture synthesis and image reconstruction. The resulting objective function is a nonconvex energy that involves a quadratic distance to the low-resolution image, a histogram-based distance to the high-resolution patch, and a nonlocal regularization that links the missing pixels with the patch pixels. For the histogram-based measure, we use a sum of Wasserstein distances between the histograms of some linear transformations of the textures. The resulting optimization problem is efficiently solved with a primal-dual proximal method. Experiments show that our method leads to a significant improvement, both visually and numerically, with respect to state-of-the-art algorithms for solving similar problems.
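For one-dimensional distributions with the same number of samples, a Wasserstein distance can be computed by simply sorting both samples, which is what makes histogram-based terms like the one above tractable. The sketch below uses the squared 2-Wasserstein distance and a hypothetical filter set (identity plus vertical and horizontal finite differences) as the "linear transformations"; both choices, and the equal-sample-count assumption, are ours rather than the paper's.

```python
import numpy as np

def w2_squared_1d(a, b):
    """Squared 2-Wasserstein distance between two 1D empirical distributions with
    the same number of samples: the optimal coupling matches sorted values."""
    a, b = np.sort(np.ravel(a)), np.sort(np.ravel(b))
    if a.shape != b.shape:
        raise ValueError("this sketch assumes equal sample counts")
    return float(np.mean((a - b) ** 2))

# Hypothetical set of linear transformations: identity and finite differences.
FILTERS = (
    lambda u: u,
    lambda u: np.diff(u, axis=0),   # vertical differences
    lambda u: np.diff(u, axis=1),   # horizontal differences
)

def histogram_term(u, v, filters=FILTERS):
    """Sum over filters of W2^2 between the value distributions of filtered u and v."""
    return sum(w2_squared_1d(f(u), f(v)) for f in filters)
```

Because only value distributions are compared, the term ignores where values occur in the image; spatial coherence has to come from the other terms of the energy, such as the nonlocal regularization.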

M. Hidane, M. El Gheche, J.-F. Aujol, Y. Berthoumieu and C. Deledalle. IEEE Transactions on Image Processing, vol. 25, no. 8, pp. 3505-3517, 2016 (IEEE Xplore, HAL). In this paper, we consider the problem of recovering a high-resolution image from a pair consisting of a complete low-resolution image and a high-resolution but incomplete one. We refer to this task as the image zoom completion problem. After discussing possible contexts in which such a setting may arise, we study three regularization strategies, giving full details concerning the numerical optimization of the corresponding energies and discussing the benefits and shortcomings of each method. We arrive at a stable and satisfactory solution by adopting a recent structure-texture decomposition model.

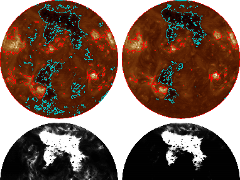

Ruben De Visscher, Véronique Delouille, Pierre Dupont and Charles-Alban Deledalle. J. Space Weather Space Clim., vol. 5, 2015 (edp open). The Sun as seen by Extreme Ultraviolet (EUV) telescopes exhibits a variety of large-scale structures. Of particular interest for space-weather applications is the extraction of active regions (AR) and coronal holes (CH). The next generation of GOES-R satellites will provide continuous monitoring of the solar corona in six EUV bandpasses that are similar to the ones provided by the SDO-AIA EUV telescope since May 2010. Supervised segmentations of EUV images that are consistent with manual segmentations, for example by space-weather forecasters, help in extracting useful information from the raw data. We present a supervised segmentation method that is based on the Maximum A Posteriori rule. Our method allows integrating manually segmented images as well as other types of information. It is applied to SDO-AIA images to segment them into AR, CH, and the remaining Quiet Sun (QS) part. A Bayesian classifier is applied on training masks provided by the user. The noise structure in EUV images is non-trivial, which suggests the use of a non-parametric kernel density estimator to fit the intensity distribution within each class. Under the Naive Bayes assumption, we can add information such as the latitude distribution and total coverage of each class in a consistent manner. This information can be prescribed by an expert or estimated with an Expectation-Maximization algorithm. The segmentation masks are in line with the training masks given as input and show consistency over time. Introducing additional information besides pixel intensity improves the quality of the final segmentation. Such a tool can aid in building automated segmentations that are consistent with some ground truth defined by the users.

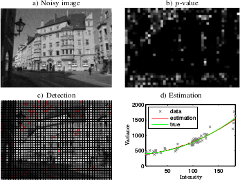

Camille Sutour, Charles-Alban Deledalle, Jean-François Aujol. SIAM Journal on Imaging Sciences, vol. 8, no. 4, pp. 2622-2661, 2015 (HAL, epubs SIAM). We propose a two-step algorithm that automatically estimates the noise level function of stationary noise from a single image, i.e., the noise variance as a function of the image intensity. First, the image is divided into small square regions and a non-parametric test is applied to decide whether each region is homogeneous or not. Based on Kendall's τ coefficient (a rank-based measure of correlation), this detector has a non-detection rate independent of the unknown distribution of the noise, provided that the noise is at least spatially uncorrelated. Moreover, we prove on a toy example that its overall detection error vanishes with respect to the region size as soon as the signal-to-noise ratio level is non-zero. Once homogeneous regions are detected, the noise level function is estimated as a second order polynomial minimizing the l
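The two steps described in this abstract can be sketched as follows, under several assumptions of our own. Homogeneity is tested with Kendall's τ between each pixel and its right-hand neighbor, using the standard normal approximation of τ under independence (variance 2(2n+5)/(9n(n-1))) with a hypothetical threshold `z=2.58`. Since the fitting criterion in the abstract is cut off, the second order polynomial is fitted here by ordinary least squares with `np.polyfit` as a stand-in. None of this is taken from the paper's code, and all function names are hypothetical.

```python
import numpy as np

def kendall_tau(x, y):
    """Kendall's tau-a rank correlation; O(n^2) pairwise version for clarity."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    n = x.size
    dx = np.sign(x[:, None] - x[None, :])
    dy = np.sign(y[:, None] - y[None, :])
    # every unordered pair is counted twice, hence n(n-1) instead of n(n-1)/2
    return float((dx * dy).sum() / (n * (n - 1)))

def is_homogeneous(block, z=2.58):
    """Hypothetical test: correlate each pixel with its right-hand neighbor.
    Under independence, tau is approximately N(0, 2(2n+5) / (9n(n-1)))."""
    x, y = block[:, :-1].ravel(), block[:, 1:].ravel()
    n = x.size
    sd = np.sqrt(2.0 * (2 * n + 5) / (9.0 * n * (n - 1)))
    return abs(kendall_tau(x, y)) < z * sd

def fit_nlf(means, variances):
    """Fit variance = a2*m^2 + a1*m + a0 by least squares (a stand-in criterion).
    Returns (a2, a1, a0), highest power first."""
    return np.polyfit(means, variances, 2)

def estimate_nlf(img, size=8):
    """Keep the size x size blocks that pass the test, then fit the noise level function."""
    means, variances = [], []
    H, W = img.shape
    for i in range(0, H - size + 1, size):
        for j in range(0, W - size + 1, size):
            block = img[i:i + size, j:j + size]
            if is_homogeneous(block):
                means.append(block.mean())
                variances.append(block.var(ddof=1))
    return fit_nlf(means, variances)
```

Edges and textures create strong correlation between neighboring pixels, so such blocks are rejected and do not contaminate the variance-versus-intensity fit.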

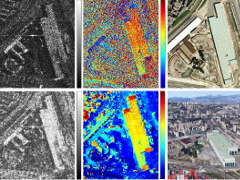

Camille Sutour, Jean-François Aujol, Charles-Alban Deledalle, Baudouin Denis de Senneville. Journal of Mathematical Imaging and Vision, vol. 53, no. 2, pp. 131-150, 2015 (HAL, Springer Link). Multi-modal image sequence registration is a challenging problem that consists of aligning two image sequences of the same scene acquired with different sensors, hence containing different characteristics. In this paper, we focus on the registration of optical and infrared image sequences acquired during the flight of a helicopter. The two cameras are located at different positions and provide complementary information. We propose a fast registration method based on edge information: a new criterion is defined in order to take into account both the magnitude and the orientation of the edges of the images to register. We derive a robust technique based on gradient ascent combined with a reliability test in order to quickly determine the optimal transformation that matches the two image sequences. We show on real multi-modal data that our method outperforms classical registration methods, thanks to the shape information provided by the contours. Moreover, results on synthetic images and in real experimental conditions show that the proposed algorithm finds the optimal transformation in a few iterations, achieving a rate of about 8 frames per second.

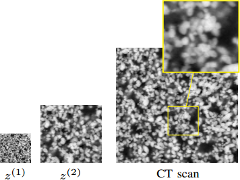

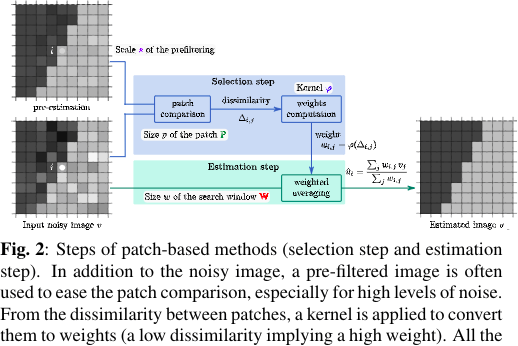

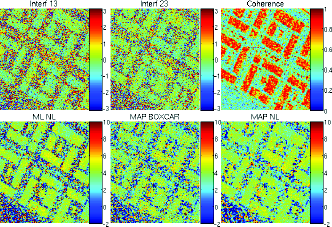

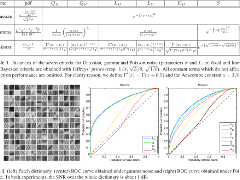

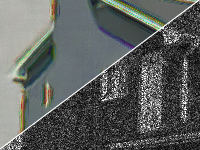

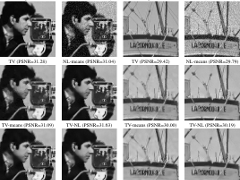

Charles-Alban Deledalle, Loïc Denis, Florence Tupin, Andreas Reigber and Marc Jäger. IEEE Trans. on Geoscience and Remote Sensing, vol. 53, no. 4, pp. 2021-2038, 2015 (IEEE Xplore, HAL). IEEE GRSS 2016 TRANSACTIONS PRIZE PAPER AWARD. Speckle noise is an inherent problem in coherent imaging systems like synthetic aperture radar. It creates strong intensity fluctuations and hampers the analysis of images and the estimation of local radiometric, polarimetric or interferometric properties. SAR processing chains thus often include a multi-looking (i.e., averaging) filter for speckle reduction, at the expense of a strong resolution loss. Preserving point-like and fine structures and textures requires locally adapting the estimation. Non-local means successfully adapt smoothing by deriving data-driven weights from the similarity between small image patches. The generalization of non-local approaches offers a flexible framework for resolution-preserving speckle reduction. We describe a general method, NL-SAR, that builds extended non-local neighborhoods for denoising amplitude, polarimetric and/or interferometric SAR images. These neighborhoods are defined on the basis of pixel similarity as evaluated by multi-channel comparison of patches. Several non-local estimations are performed and the best one is locally selected to form a single restored image with good preservation of radar structures and discontinuities. The proposed method is fully automatic and handles single and multi-look images, with or without interferometric or polarimetric channels. Efficient speckle reduction with very good resolution preservation is demonstrated both in numerical experiments using simulated data and on airborne radar images. The source code of a parallel implementation of NL-SAR is released with the paper.

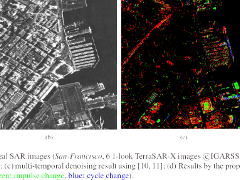

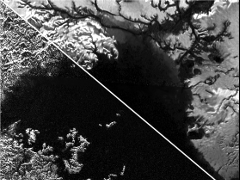

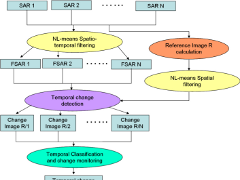

Xin Su, Charles-Alban Deledalle, Florence Tupin, Hong Sun. ISPRS Journal of Photogrammetry and Remote Sensing, vol. 101, pp. 247-261, 2015 (HAL, ScienceDirect). This paper presents a likelihood-ratio-test-based method of change detection and classification for synthetic aperture radar (SAR) time series, namely NORmalized Cut on chAnge criterion MAtrix (NORCAMA). This method involves three steps: 1) a multi-temporal pre-denoising step over the whole image series to reduce the effect of speckle noise; 2) likelihood-ratio-test-based change criteria between two images using both the original noisy images and the denoised images; 3) change classification by a normalized-cut-based clustering-and-recognizing method on the change criterion matrix (CCM). Experiments on both synthetic and real SAR image series demonstrate the effectiveness of the proposed framework.

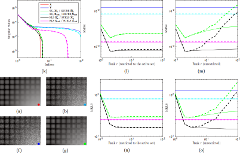

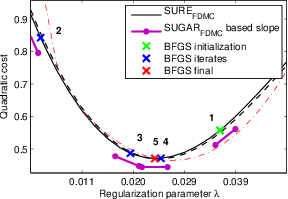

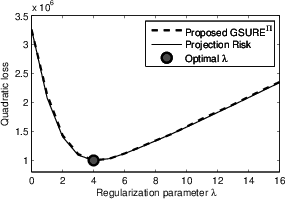

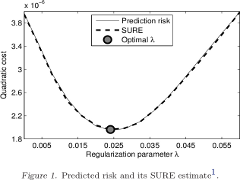

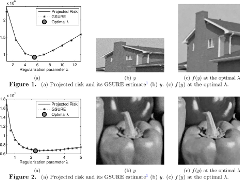

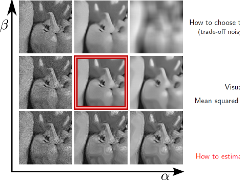

C.-A. Deledalle, S. Vaiter, J.-M. Fadili, G. Peyré. SIAM Journal on Imaging Sciences, vol. 7, no. 4, pp. 2448-2487, 2014 (epubs SIAM, ArXiv). Algorithms to solve variational regularization of ill-posed inverse problems usually involve operators that depend on a collection of continuous parameters. When these operators enjoy some (local) regularity, these parameters can be selected using the so-called Stein Unbiased Risk Estimate (SURE). While this selection is usually performed by exhaustive search, we address in this work the problem of using the SURE to efficiently optimize for a collection of continuous parameters of the model. When considering non-smooth regularizers, such as the popular l1-norm corresponding to the soft-thresholding mapping, the SURE is a discontinuous function of the parameters, which prevents the use of gradient descent optimization techniques. Instead, we focus on an approximation of the SURE based on finite differences as proposed in (Ramani et al., 2008). Under mild assumptions on the estimation mapping, we show that this approximation is a weakly differentiable function of the parameters and that its weak gradient, coined the Stein Unbiased GrAdient estimator of the Risk (SUGAR), provides an asymptotically (with respect to the data dimension) unbiased estimate of the gradient of the risk. Moreover, in the particular case of soft-thresholding, the SUGAR is also proved to be a consistent estimator. The SUGAR can then be used as a basis to perform a quasi-Newton optimization. The computation of the SUGAR relies on the closed-form (weak) differentiation of the non-smooth function. We provide its expression for a large class of iterative proximal splitting methods and apply our strategy to regularizations involving non-smooth convex structured penalties. Illustrations on various image restoration and matrix completion problems are given.
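To make the finite-difference approximation concrete, here is a minimal sketch for the soft-thresholding denoiser under Gaussian noise. The divergence term of SURE is estimated with a single random probe, in the spirit of Ramani et al. (2008); we use a Rademacher probe (any zero-mean, unit-variance i.i.d. probe gives an unbiased trace estimate). For soft-thresholding the exact divergence, the number of entries with |y_i| > λ, is available for comparison. The function names are ours, and SUGAR itself (differentiating this approximation with respect to λ) is not implemented.

```python
import numpy as np

def soft_threshold(y, lam):
    return np.sign(y) * np.maximum(np.abs(y) - lam, 0.0)

def mc_divergence(f, y, eps=1e-6, rng=None):
    """Monte Carlo finite-difference estimate of div f(y):
    delta . (f(y + eps * delta) - f(y)) / eps, with a Rademacher probe delta."""
    rng = np.random.default_rng() if rng is None else rng
    delta = rng.choice([-1.0, 1.0], size=y.shape)
    return float(np.sum(delta * (f(y + eps * delta) - f(y))) / eps)

def sure(f, y, sigma, div):
    """SURE for y = x + w with w ~ N(0, sigma^2 I):
    ||y - f(y)||^2 - n sigma^2 + 2 sigma^2 div f(y)."""
    r = y - f(y)
    return float(np.sum(r ** 2) - y.size * sigma ** 2 + 2 * sigma ** 2 * div)
```

Evaluating `sure` on a grid of λ values and keeping the minimizer is the exhaustive search mentioned in the abstract; SUGAR instead provides a gradient of the finite-difference approximation so that λ can be optimized with quasi-Newton steps.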

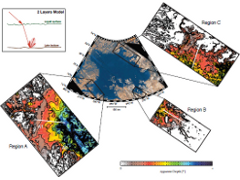

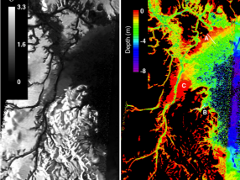

A. Lucas, O. Aharonson, C.-A. Deledalle, A. G. Hayes, R. L. Kirk, A. Howington-Kraus Journal of Geophysical Research: Planets, vol. 119, no. 10, pp. 2149-2166, 2014 (Wiley Online Library, HAL) The Cassini Synthetic Aperture Radar has been acquiring images of Titan's surface since October 2004. To date, 59% of Titan's surface has been imaged by radar, with significant regions imaged more than once. Radar data suffer from speckle noise, which hinders the interpretation of small-scale features and the comparison of re-imaged regions for change detection. We present here a new image analysis technique that combines a denoising algorithm with mapping and quantitative measurements, greatly enhancing the utility of the data and offering previously unattainable insights. After validating the technique, we demonstrate the potential improvement in understanding surface processes on Titan and in defining global mapping units, focusing on specific landforms including lakes, dunes, mountains and fluvial features. Lake shorelines are delineated with greater accuracy. Previously unrecognized dissection by fluvial channels emerges beneath shallow methane cover. Dune wavelengths and inter-dune extents are more precisely measured. A significant refinement in producing DEMs is also shown. Interactions of fluvial and aeolian processes with topographic relief are more precisely observed and understood than previously. Benches in bathymetry are observed in the northern sea Ligeia Mare. Submerged valleys show similar depths, suggesting that they are equilibrated with marine benches. These new observations suggest a liquid level increase. The liquid level increase in the north may be due to changes on seasonal or longer timescales. |

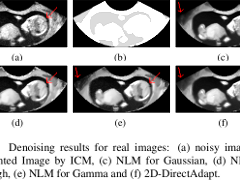

Camille Sutour, Charles-Alban Deledalle, Jean-François Aujol IEEE Trans. on Image Processing, vol. 23, no. 8, pp. 3506-3521, 2014 (IEEE Xplore, HAL) Image denoising is a central problem in image processing and is often a necessary step prior to higher-level analysis such as segmentation, reconstruction or super-resolution. The non-local means (NL-means) perform denoising by exploiting the natural redundancy of patterns inside an image: they compute a weighted average of pixels whose neighborhoods (patches) are close to each other. This significantly reduces the noise while preserving most of the image content. While the NL-means perform well on flat areas and textures, they suffer from two opposite drawbacks: they may over-smooth low-contrast areas or leave residual noise around edges and singular structures. Denoising can also be performed by total variation minimization (the ROF model), which restores regular images but is prone to over-smoothing textures, staircasing effects, and contrast losses. We introduce in this paper a variational approach that corrects the over-smoothing and reduces the residual noise of the NL-means by adaptively regularizing non-local methods with the total variation. The proposed regularized NL-means algorithm combines these methods and reduces both of their respective drawbacks by minimizing an adaptive total variation with a non-local data fidelity term. Moreover, this model adapts to different noise statistics, and a fast solution can be obtained in the general case of the exponential family. We develop this model for image denoising and adapt it to video denoising with 3D patches. |
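
The NL-means weighted average that this work builds on can be sketched on a 1-D signal in plain Python. This is only the baseline non-local means with a Gaussian weight on the squared patch distance; the patch radius, search radius and bandwidth `h` are illustrative assumptions, the total-variation regularization proposed in the paper is not implemented, and the function names are hypothetical.

```python
import math
import random


def nl_means_1d(signal, patch_radius=1, search_radius=5, h=3.0):
    """NL-means on a 1-D signal: weights exp(-||P_i - P_j||^2 / h^2)."""
    n = len(signal)

    def patch(i):
        # Replicate border values so that every sample has a full patch.
        return [signal[min(max(i + k, 0), n - 1)]
                for k in range(-patch_radius, patch_radius + 1)]

    patches = [patch(i) for i in range(n)]
    out = []
    for i in range(n):
        num = den = 0.0
        # Candidates are restricted to a search window centred on i.
        for j in range(max(0, i - search_radius), min(n, i + search_radius + 1)):
            d2 = sum((a - b) ** 2 for a, b in zip(patches[i], patches[j]))
            w = math.exp(-d2 / h ** 2)
            num += w * signal[j]
            den += w
        out.append(num / den)
    return out


def mse(estimate, reference):
    """Mean squared error between two equal-length sequences."""
    return sum((a - b) ** 2 for a, b in zip(estimate, reference)) / len(reference)


if __name__ == "__main__":
    rng = random.Random(0)
    clean = [0.0] * 100 + [10.0] * 100  # piecewise-constant toy signal
    noisy = [c + rng.gauss(0.0, 1.0) for c in clean]
    print(mse(noisy, clean), mse(nl_means_1d(noisy), clean))
```

On this toy step signal, patches straddling the edge find almost no similar neighbours, which is the "residual noise around edges" behaviour the abstract describes.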

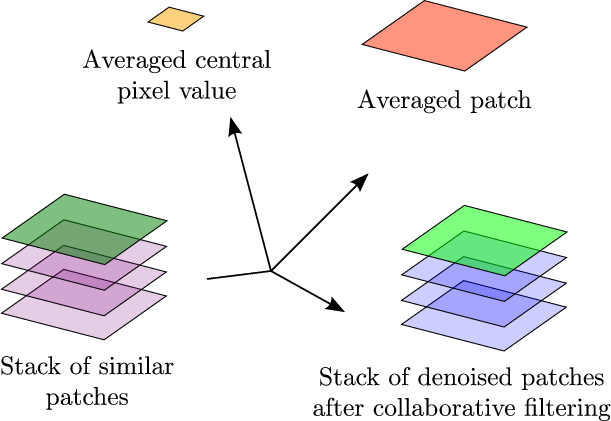

C. Deledalle, L. Denis, G. Poggi, F. Tupin, L. Verdoliva IEEE Signal Processing Magazine, vol. 31, no. 4, pp. 69-78, 2014 (IEEE Xplore, HAL) Most current SAR systems offer high-resolution images featuring polarimetric, interferometric, multi-frequency, multi-angle, or multi-date information. SAR images, however, suffer from strong fluctuations due to the speckle phenomenon inherent to coherent imagery. Hence, all derived parameters display strong signal-dependent variance, preventing the full exploitation of such a wealth of information. Even with the abundance of despeckling techniques proposed over the last three decades, there is still a pressing need for new methods that can handle this variety of SAR products and efficiently eliminate speckle without sacrificing spatial resolution. Recently, patch-based filtering has emerged as a highly successful concept in image processing. By exploiting the redundancy between similar patches, it succeeds in suppressing most of the noise with good preservation of texture and thin structures. Extensions of patch-based methods to speckle reduction and joint exploitation of multi-channel SAR images (interferometric, polarimetric, or PolInSAR data) have led to the best denoising performance in radar imaging to date. We give a comprehensive survey of patch-based nonlocal filtering of SAR images, focusing on the two main ingredients of the methods: measuring patch similarity, and estimating the parameters of interest from a collection of similar patches. |

Joseph Salmon, Zachary Harmany, Charles-Alban Deledalle, Rebecca Willett Journal of Mathematical Imaging and Vision, vol. 48, no. 2, pp. 279-297, 2014 (arXiv, SpringerLink) Photon-limited imaging, which arises in applications such as spectral imaging, night vision, nuclear medicine, and astronomy, occurs when the number of photons collected by a sensor is small relative to the desired image resolution. Typically a Poisson distribution is used to model these observations, and the inherent heteroscedasticity of the data combined with standard noise removal methods yields significant artifacts. This paper introduces a novel denoising algorithm for photon-limited images which combines elements of dictionary learning and sparse representations for image patches. The method employs both an adaptation of Principal Component Analysis (PCA) for Poisson noise and recently developed sparsity regularized convex optimization algorithms for photon-limited images. A comprehensive empirical evaluation of the proposed method helps characterize the performance of this approach relative to other state-of-the-art denoising methods. The results reveal that, despite its simplicity, PCA-flavored denoising appears to be highly competitive in very low light regimes. |

Xin Su, Charles-Alban Deledalle, Florence Tupin, Hong Sun IEEE Transactions on Geoscience and Remote Sensing, vol. 52, no. 10, pp. 6181-6196, 2014 (IEEE Xplore) This paper presents a denoising approach for multitemporal synthetic aperture radar (SAR) images based on the concept of nonlocal means (NLM). It exploits the information redundancy existing in multitemporal images through a two-step strategy. The first step performs a nonlocal weighted estimation driven by the redundancy in time, whereas the second step makes use of the nonlocal estimation in space. Using patch-similarity-based misregistration estimation, we also adapt this approach to the case of unregistered SAR images. The experiments illustrate the efficiency of the proposed method in denoising multitemporal images while preserving new information. |

Samuel Vaiter, Charles-Alban Deledalle, Gabriel Peyré, Charles Dossal, Jalal Fadili Applied and Computational Harmonic Analysis, vol. 35, no. 3, pp. 433-451, 2013 (HAL, Elsevier) In this paper, we aim at recovering an unknown signal x0 from noisy measurements y = Phi*x0 + w, where Phi is an ill-conditioned or singular linear operator and w accounts for some noise. To regularize such an ill-posed inverse problem, we impose an analysis sparsity prior. More precisely, the recovery is cast as a convex optimization program where the objective is the sum of a quadratic data fidelity term and a regularization term formed of the L1-norm of the correlations between the sought-after signal and atoms in a given (generally overcomplete) dictionary. The L1-sparsity analysis prior is weighted by a regularization parameter lambda > 0. In this paper, we prove that any minimizer of this problem is a piecewise-affine function of the observations y and the regularization parameter lambda. As a byproduct, we exploit these properties to get an objectively guided choice of lambda. In particular, we develop an extension of the Generalized Stein Unbiased Risk Estimator (GSURE) and show that it is an unbiased and reliable estimator of an appropriately defined risk. The latter encompasses special cases such as the prediction risk, the projection risk and the estimation risk. We apply these risk estimators to the special case of L1-sparsity analysis regularization. We also discuss implementation issues and propose fast algorithms to solve the L1 analysis minimization problem and to compute the associated GSURE. We finally illustrate the applicability of our framework to parameter(s) selection on several imaging problems. |

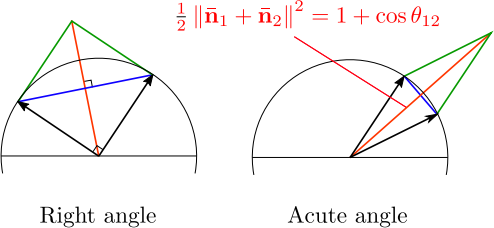

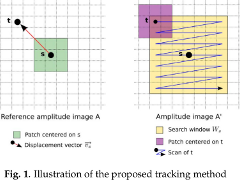

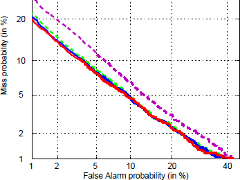

Charles-Alban Deledalle, Loïc Denis and Florence Tupin International Journal of Computer Vision, vol. 99, no. 1, pp. 86-102, 2012 (HAL-TEL, SpringerLink, bibtex) Many tasks in computer vision require matching image parts. While higher-level methods consider image features such as edges or robust descriptors, low-level approaches (so-called image-based) compare groups of pixels (patches) and provide dense matching. Patch similarity is a key ingredient to many techniques for image registration, stereo-vision, change detection or denoising. Recent progress in natural image modeling also makes intensive use of patch comparison. A fundamental difficulty when comparing two patches from "real" data is to decide whether the differences should be ascribed to noise or to intrinsic dissimilarity. The Gaussian noise assumption leads to the classical definition of patch similarity based on the squared differences of intensities. For the case where noise departs from the Gaussian distribution, several similarity criteria have been proposed in the literature of image processing, detection theory and machine learning. By expressing patch (dis)similarity as a detection test under a given noise model, we review these criteria, introduce a new one, and discuss their properties. We then assess their performance for different tasks: patch discrimination, image denoising, stereo-matching and motion-tracking under gamma and Poisson noises. The proposed criterion based on the generalized likelihood ratio is shown to be both easy to derive and powerful in these diverse applications. |
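
As an illustration of a generalized likelihood ratio (GLR) criterion, consider L-look intensities with gamma-distributed speckle. Comparing the hypothesis of a shared reflectivity (maximum-likelihood estimate (a + b)/2) with separate reflectivities (estimates a and b) gives, per pixel, -log GLR = 2L log((a + b) / (2 sqrt(ab))). The sketch below sums this over a patch, assuming independent pixels; this derivation and the function names are our own illustration rather than code from the paper. Unlike the squared difference, the criterion is unchanged when both patches are rescaled by the same factor, as one would expect under multiplicative noise.

```python
import math


def glr_dissimilarity_gamma(patch1, patch2, looks=1):
    """-log GLR for L-look gamma-distributed intensities, summed over pixels.

    Per pixel: 2 * L * log((a + b) / (2 * sqrt(a * b))), which is >= 0
    and equals 0 exactly when a == b.
    """
    return sum(2.0 * looks * math.log((a + b) / (2.0 * math.sqrt(a * b)))
               for a, b in zip(patch1, patch2))


def squared_difference(patch1, patch2):
    """Classical patch distance under the Gaussian noise assumption."""
    return sum((a - b) ** 2 for a, b in zip(patch1, patch2))


if __name__ == "__main__":
    dark = ([1.0, 2.0], [2.0, 4.0])
    bright = ([10.0, 20.0], [20.0, 40.0])  # same pair, 10x brighter
    print(glr_dissimilarity_gamma(*dark), glr_dissimilarity_gamma(*bright))
    print(squared_difference(*dark), squared_difference(*bright))
```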

Charles-Alban Deledalle, Vincent Duval and Joseph Salmon Journal of Mathematical Imaging and Vision, pp. 1-18, 2011 (SpringerLink, bibtex) We propose in this paper an extension of the Non-Local Means (NL-Means) denoising algorithm. The idea is to replace the usual square patches used to compare pixel neighborhoods with various shapes that can take advantage of the local geometry of the image. We provide a fast algorithm to compute the NL-Means with arbitrary shapes thanks to the Fast Fourier Transform. We then consider local combinations of the estimators associated with various shapes by using Stein's Unbiased Risk Estimate (SURE). Experimental results show that this algorithm improves on the standard NL-Means performance and is close to state-of-the-art methods, both in terms of visual quality and numerical results. Moreover, common visual artifacts usually observed when denoising with NL-Means are reduced or suppressed thanks to our approach. |

Charles-Alban Deledalle, Loïc Denis and Florence Tupin IEEE Trans. on Geoscience and Remote Sensing, vol. 49, no. 4, pp. 1441-1452, April 2011 (IEEE Xplore, pdf, bibtex) Interferometric synthetic aperture radar (InSAR) data provide reflectivity, interferometric phase and coherence images, which are paramount to scene interpretation or low-level processing tasks such as segmentation and 3D reconstruction. In practice, these images are estimated from Hermitian products computed over local windows. These windows lead to biases and resolution losses due to local heterogeneity caused by edges and textures. This paper proposes a non-local approach for the joint estimation of the reflectivity, the interferometric phase and the coherence images from an interferometric pair of co-registered single-look complex (SLC) SAR images. Non-local techniques are known to efficiently reduce noise while preserving structures by performing a weighted averaging of similar pixels. Two pixels are considered similar if their surrounding image patches resemble each other. Patch similarity is usually defined as the Euclidean distance between the vectors of gray levels. In this paper, a statistically grounded patch-similarity criterion suitable for SLC images is derived. A weighted maximum likelihood estimation of the SAR interferogram is then computed with weights derived in a data-driven way. Weights are defined from intensity and interferometric phase, and are iteratively refined based both on the similarity between noisy patches and on the similarity of patches from the previous estimate. The efficiency of this new interferogram construction technique is illustrated both qualitatively and quantitatively on synthetic and real data. |
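
A minimal sketch of the final weighted estimation step, assuming the weights are already given (the patch-similarity criterion and iterative refinement that produce them are not implemented here). Under the assumption that both SLC images share the same reflectivity R and complex correlation D*exp(i*beta), maximizing the weighted circular Gaussian likelihood gives R = (c11 + c22)/2, beta = arg(c12) and D = |c12|/R, where c11, c22, c12 are weighted second-order moments. This derivation and the function name are our own illustration, not the paper's code; with uniform weights over a window it reduces to the classical boxcar estimator.

```python
import cmath


def weighted_interferogram(z1, z2, weights):
    """Weighted estimates of (reflectivity, phase, coherence) from SLC samples.

    Assumes a shared reflectivity R and complex correlation D * exp(1j * beta)
    in both channels; the weighted Gaussian likelihood is then maximized by
    R = (c11 + c22) / 2, beta = arg(c12), D = |c12| / R.
    """
    total = sum(weights)
    c11 = sum(w * abs(a) ** 2 for w, a in zip(weights, z1)) / total
    c22 = sum(w * abs(b) ** 2 for w, b in zip(weights, z2)) / total
    c12 = sum(w * a * b.conjugate() for w, a, b in zip(weights, z1, z2)) / total
    reflectivity = 0.5 * (c11 + c22)
    return reflectivity, cmath.phase(c12), abs(c12) / reflectivity


if __name__ == "__main__":
    z1 = [1 + 1j, 2 - 0.5j, -0.3 + 0.8j]
    z2 = [a * cmath.exp(-0.7j) for a in z1]  # same amplitudes, constant phase shift
    print(weighted_interferogram(z1, z2, [1.0, 0.5, 0.25]))
```

In the toy example the second image is the first one with a constant phase shift, so the estimated phase is 0.7 rad and the coherence is 1.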

Charles-Alban Deledalle, Loïc Denis and Florence Tupin IEEE Trans. on Image Processing, vol. 18, no. 12, pp. 2661-2672, December 2009 (IEEE Xplore, pdf, bibtex) Image denoising is an important problem in image processing since noise may interfere with visual or automatic interpretation. This paper presents a new approach for image denoising in the case of a known uncorrelated noise model. The proposed filter is an extension of the Non Local means (NL means) algorithm introduced by Buades et al. [1], which performs a weighted average of the values of similar pixels. Pixel similarity is defined in NL means as the Euclidean distance between patches (rectangular windows centered on each of the two pixels). In this paper, a more general and statistically grounded similarity criterion is proposed which depends on the noise distribution model. The denoising process is expressed as a weighted maximum likelihood estimation problem where the weights are derived in a data-driven way. These weights can be iteratively refined based on both the similarity between noisy patches and the similarity of patches extracted from the previous estimate. We show that this iterative process noticeably improves the denoising performance, especially in the case of low signal-to-noise ratio images such as Synthetic Aperture Radar (SAR) images. Numerical experiments illustrate that the technique can be successfully applied to the classical case of additive Gaussian noise but also to cases such as multiplicative speckle noise. The proposed denoising technique appears to improve on state-of-the-art performance in the latter case. |

International conference and workshop papers (56)

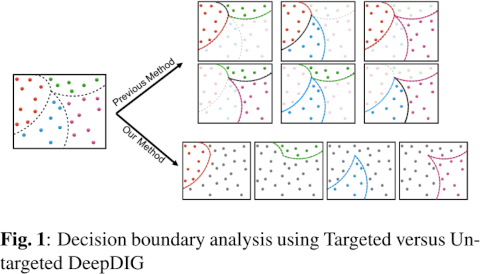

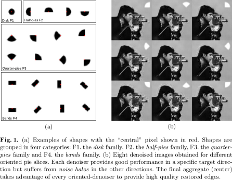

Jane Berk, Martin Jaszewski, Charles-Alban Deledalle and Shibin Parameswaran ICIP 2022, Bordeaux, France, Oct 2022 For more than a decade, deep learning algorithms have consistently achieved and improved upon state-of-the-art performance on image classification tasks. However, there is a general lack of understanding of the decision boundaries carved by these modern deep neural network architectures. Recently, an algorithm called DeepDIG was introduced to generate boundary instances between two classes based on the decision regions defined by any deep neural network classifier. Although it is very effective in generating boundary instances, the underlying algorithm was designed to work with two classes at a time in a non-commutative fashion, which makes it ill-suited for multi-class problems with hundreds of classes. In this work, we extend the DeepDIG algorithm so that it scales linearly with the number of classes. We show that the proposed U-DeepDIG algorithm maintains the efficacy of the original DeepDIG algorithm while being scalable and more efficient when applied to larger classification problems. We demonstrate this by applying our algorithm to the MNIST, Fashion-MNIST and CIFAR10 datasets. In addition to qualitative comparisons, we also perform an extensive quantitative comparison by analyzing the margin between the class boundaries and the instances generated. |

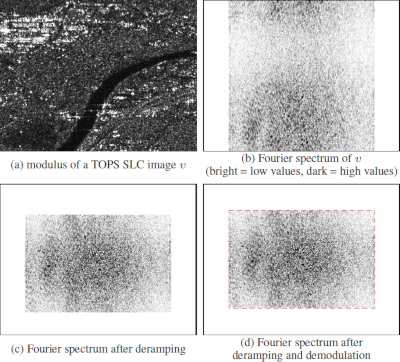

Rémy Abergel, Loïc Denis, Florence Tupin, Saïd Ladjal, Charles-Alban Deledalle, Andrés Almansa IGARSS 2019, Yokohama, Japan, July 2019 Speckle reduction is a necessary step for many applications. Very effective methods have been developed in recent years for single-image speckle reduction and multi-temporal speckle filtering. To reduce the presence of sidelobes around bright targets, SAR images are spectrally weighted. This processing impacts the speckle statistics by introducing spatial correlations. These correlations severely impact speckle reduction methods that require uncorrelated speckle as input. Spatial down-sampling is thus typically applied to reduce the speckle spatial correlations prior to speckle filtering. To better preserve the spatial resolution, we describe how to correctly resample Sentinel-1 images and extract bright targets in order to process full-resolution images with speckle-reduction methods. Illustrations are given both for single-image despeckling and spatio-temporal speckle filtering. |

Charles-Alban Deledalle, Loïc Denis, Laurent Ferro-Famil, Jean-Marie Nicolas, Florence Tupin IGARSS 2019, Yokohama, Japan, July 2019 The availability of multi-temporal stacks of SAR images opens the way to new speckle reduction methods. Beyond mere spatial filtering, the time series can be used to improve the signal-to-noise ratio of structures that persist for several dates. Among multi-temporal filtering strategies to reduce speckle fluctuations, a recent approach has proved to be very effective: ratio-based filtering (RABASAR). This method, developed to reduce the speckle in multi-temporal intensity images, first computes a "mean image" with a high signal-to-noise ratio (a so-called super-image), and then processes the ratio between the multi-temporal stack and the super-image. In this paper, we propose an extension of this approach to polarimetric SAR images. We illustrate its potential on a stack of fully-polarimetric images from the RADARSAT-2 satellite. |

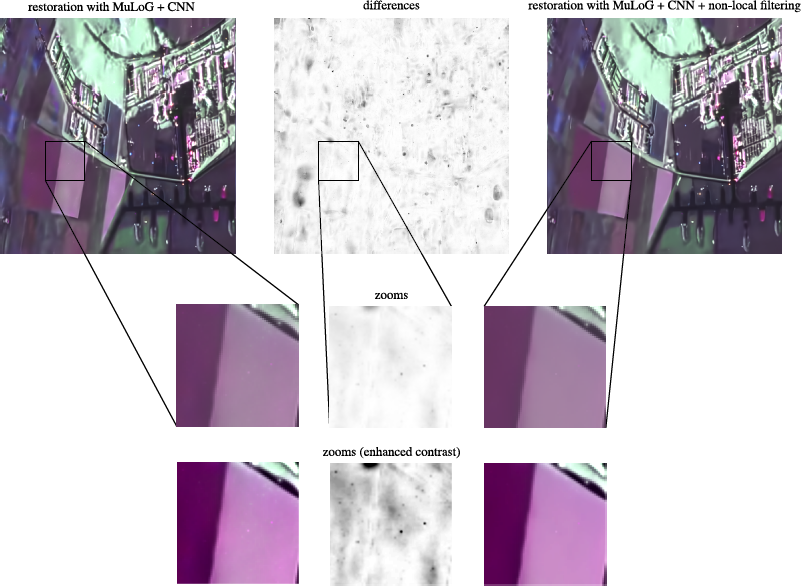

Loïc Denis, Charles-Alban Deledalle, Florence Tupin IGARSS 2019, Yokohama, Japan, July 2019 Speckle reduction has benefited from recent progress in image processing, in particular patch-based non-local filtering and deep learning techniques. These two families of methods offer complementary characteristics but have not yet been combined. We explore strategies to make the most of each approach. |

Florence Tupin, Loïc Denis, Charles-Alban Deledalle, Giampaolo Ferraioli IGARSS 2019, Yokohama, Japan, July 2019 Speckle reduction is a major issue for many SAR imaging applications using amplitude, interferometric, polarimetric or tomographic data. This subject has been widely investigated using various approaches. Over the past decade, breakthrough methods based on patches have brought unprecedented results in the estimation of radar properties. In this paper, we review the different adaptations that have been proposed in recent years for different SAR modalities (mono-channel data like intensity images, multi-channel data like interferometric, tomographic or polarimetric data, or multi-modal data combining optical and SAR images), and discuss the new trends on this subject. |

Giampaolo Ferraioli, Loïc Denis, Charles-Alban Deledalle, Florence Tupin IGARSS 2019, Yokohama, Japan, July 2019 In the last decades, several approaches for solving the Phase Unwrapping (PhU) problem using multi-channel Interferometric Synthetic Aperture Radar (InSAR) data have been developed. Many of the proposed approaches are based on statistical estimation theory, both classical and Bayesian. In particular, the statistical approaches based on the use of the whole complex multi-channel dataset have turned out to be effective. The latter are based on the exploitation of the covariance matrix, which contains the parameters of interest. In this paper, the added value of the Non Local (NL) paradigm within the InSAR multi-channel PhU framework is investigated. The impact of the NL technique is analyzed using realistic simulated multi-channel data and X-band data. |

Charles-Alban Deledalle, Nicolas Papadakis, Joseph Salmon, and Samuel Vaiter SSVM 2019, Hofgeismar, Germany, July 2019 (HAL, ArXiv) In inverse problems, the use of an l12 analysis regularizer induces a bias in the estimated solution. We propose a general refitting framework for removing this artifact while keeping information of interest contained in the biased solution. This is done through the use of refitting block penalties that only act on the co-support of the estimation. Based on an analysis of related works in the literature, we propose a new penalty that is well suited for refitting purposes. We also present an efficient algorithmic method to obtain the refitted solution along with the original (biased) solution for any convex refitting block penalty. Experiments illustrate the good behavior of the proposed block penalty for refitting. |

Brendan Le Bouill, Jean-Francois Aujol, Yannick Berthoumieu and Charles-Alban Deledalle ISIVC 2018, Rabat, Morocco, Nov. 2018 Most of the recent top-ranked stereovision algorithms are based on some oversegmentation of the stereo pair images. Each image segment is modelled as a 3D plane, transposing the stereovision problem into a three-parameter plane estimation problem. To find the set of planes that best fits the input data while regularising over adjacent 3D planes, a global energy is minimised using different optimisation strategies. The regularisation term is important as it enforces the consistency of the reconstructed scene. In this paper, we propose a disparity map refinement strategy based on a triangular mesh of the image domain. This mesh is projected along the rays of the camera, each triangle being modelled by a slanted plane. Considering triangles instead of free-form superpixels, i.e. adding a geometrical constraint on the shape of segment boundaries, makes it possible to formulate simpler regularisation terms that only involve the mesh vertex disparities in the computations. Different curvature regularisation terms from the literature are explored and a new one is proposed, with better properties with regard to the usual coplanarity assumption between adjacent planes. We demonstrate the performance gain of the proposed method compared to the popular Semi-Global Matching (SGM) algorithm. |

Charles Deledalle, Loïc Denis, Florence Tupin IGARSS 2018, Valencia, Spain, July 2018 (HAL) Speckle reduction is a long-standing topic in SAR data processing. Continuous progress made in the field of image denoising fuels the development of methods dedicated to speckle in SAR images. Adapting a denoising technique to the specific statistical nature of speckle presents variable levels of difficulty. It is well known that the logarithm transform maps the intrinsically multiplicative speckle into an additive and stationary component, thereby paving the way to the application of general-purpose image denoising methods to SAR intensity images. Multi-channel SAR images such as those obtained in interferometric (InSAR) or polarimetric (PolSAR) configurations are much more challenging. This paper describes MuLoG, a generic approach for mapping a multi-channel SAR image into real-valued images with an additive speckle component whose variance is approximately constant. With this approach, general-purpose image denoising algorithms can be readily applied to restore InSAR or PolSAR data. In particular, we show how recent denoising methods based on deep convolutional neural networks lead to state-of-the-art results when embedded in the MuLoG framework. |
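
The single-channel fact recalled above (the logarithm turns multiplicative speckle into an additive component of constant variance) can be checked with a short simulation. Assumptions of this sketch: fully developed speckle modelled as unit-mean gamma noise with L looks, and hypothetical function names; it illustrates only the scalar log transform, not MuLoG's matrix-log treatment of multi-channel data. For L = 1 the variance of the log-speckle is pi^2/6 (about 1.645) whatever the reflectivity, whereas the intensity variance grows with the square of the reflectivity.

```python
import math
import random
import statistics


def speckled_intensity(reflectivity, looks, n, seed=0):
    """Simulate L-look intensities: reflectivity times unit-mean gamma speckle."""
    rng = random.Random(seed)
    # gammavariate(shape, scale) has mean shape * scale = 1 here.
    return [reflectivity * rng.gammavariate(looks, 1.0 / looks) for _ in range(n)]


if __name__ == "__main__":
    for r in (1.0, 100.0):
        samples = speckled_intensity(r, looks=1, n=10000)
        logs = [math.log(v) for v in samples]
        # Intensity variance scales with r**2; log-intensity variance does not.
        print(r, statistics.pvariance(samples), statistics.pvariance(logs))
```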

Weiying Zhao, Charles-Alban Deledalle, Loïc Denis, Henri Maitre, Jean-Marie Nicolas, Florence Tupin IGARSS 2018, Valencia, Spain, July 2018 (HAL) In this paper, a generic method is proposed to reduce speckle in multi-temporal stacks of SAR images. The method is based on the computation of a "super-image", with a large number of looks, by temporal averaging. Then, ratio images are formed by dividing each image of the multi-temporal stack by the "super-image". In the absence of radiometric changes, the only temporal fluctuations of the intensity at a given spatial location are due to the speckle phenomenon. In areas affected by temporal changes, fluctuations cannot be ascribed to speckle only but also to radiometric changes. The overall effect of the division by the "super-image" is to improve the spatial stationarity: ratio images are much more homogeneous than the original images. Therefore, filtering these ratio images with a speckle-reduction method is more effective, in terms of speckle suppression, than filtering the original multi-temporal stack. After denoising of the ratio images, the despeckled multi-temporal stack is obtained by multiplication with the "super-image". Results are presented and analyzed on both synthetic and real SAR data and show the interest of the proposed approach. |
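
A minimal sketch of the three steps described in this abstract (temporal averaging into a super-image, denoising each ratio image, multiplying back by the super-image), on 1-D "images" stored as Python lists. The moving-average `box_filter` is a hypothetical placeholder for an actual speckle-reduction method, which would be passed through the `denoise` argument; the function names are illustrative, not the authors' implementation.

```python
def box_filter(signal, radius=1):
    """Placeholder denoiser: moving average with a window truncated at borders."""
    n = len(signal)
    out = []
    for i in range(n):
        window = signal[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def ratio_based_filter(stack, denoise=box_filter):
    """Return (super_image, filtered_stack) for a list of equal-length images."""
    n_dates = len(stack)
    # 1) Super-image: temporal average at each spatial position.
    super_image = [sum(column) / n_dates for column in zip(*stack)]
    filtered = []
    for image in stack:
        # 2) Ratio image, much more spatially stationary than the image itself.
        ratio = [v / s for v, s in zip(image, super_image)]
        # 3) Denoise the ratio, then multiply back by the super-image.
        filtered.append([r * s for r, s in zip(denoise(ratio), super_image)])
    return super_image, filtered


if __name__ == "__main__":
    stack = [[1.0, 4.0, 9.0, 2.0], [1.2, 3.5, 8.0, 2.5], [0.8, 4.5, 10.0, 1.5]]
    super_image, filtered = ratio_based_filter(stack)
    print(super_image)
    print(filtered)
```

When nothing changes over time (all dates identical), every ratio equals 1 and the output reproduces the input, which is why the ratio images are so much easier to filter.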

Charles Deledalle, Loïc Denis, Florence Tupin, Sylvain Lobry EUSAR 2018, Aachen, Germany, June 2018 (VDE Verlag) Due to the speckle phenomenon, some form of filtering must be applied to SAR data prior to performing any polarimetric analysis. Beyond the simple multilooking operation (i.e., moving average), several methods have been designed specifically for PolSAR filtering. The specifics of speckle noise and the correlations between polarimetric channels make PolSAR filtering more challenging than usual image restoration problems. Despite their striking performance, existing image denoising algorithms, mostly designed for additive white Gaussian noise, cannot be directly applied to PolSAR data. We bridge this gap with MuLoG by providing a general scheme that stabilizes the variance of the polarimetric channels and that can embed almost any Gaussian denoiser. We describe the MuLoG approach and illustrate its performance on airborne PolSAR data using a very recent Gaussian denoiser based on a convolutional neural network. |

Fabien Pierre, Jean-François Aujol, Charles-Alban Deledalle, Nicolas Papadakis EMMCVPR 2017, Venice, Italy, October 2017 (Springer Link, HAL) This paper focuses on the denoising of chrominance channels of color images. We propose a variational framework involving TV regularization that modifies the chrominance channels while preserving the input luminance of the image. The main issue of such a problem is to ensure that the denoised chrominance together with the original luminance belong to the RGB space after color format conversion. Standard methods in the literature simply truncate the converted RGB values, which leads to a change of hue in the denoised image. To tackle this issue, an "RGB-compatible" chrominance range is defined at each pixel with respect to the input luminance. An algorithm to compute the orthogonal projection onto such a set is then introduced. Next, we propose to extend the CLEAR debiasing technique to avoid the loss of colourfulness produced by TV regularization. The benefits of our approach with respect to state-of-the-art methods are illustrated on several experiments. |

Clément Rambour, Loïc Denis, Florence Tupin, Jean-Marie Nicolas, Hélène Oriot, Laurent Ferro-Famil, Charles Deledalle IGARSS 2017, Fort Worth, Texas, USA, July 2017 (IEEE Xplore, HAL) Starting from a stack of co-registered SAR images in interferometric configuration, SAR tomography performs a reconstruction of the reflectivity of scatterers in 3-D. Several scatterers observed within the same resolution cell of each SAR image can be separated by jointly unmixing the SAR complex amplitude observed throughout the stack. To achieve a reliable tomographic reconstruction, it is necessary to estimate the SAR covariance matrix locally by performing some spatial averaging. This necessary averaging step introduces some resolution loss and can bias the tomographic reconstruction by mistakenly including the response of scatterers located within the averaging area but outside the resolution cell of interest. This paper addresses the problem of identifying pixels corresponding to similar tomographic content, i.e., pixels that can be safely averaged prior to tomographic reconstruction. We derive a similarity criterion adapted to SAR tomography and compare its performance with existing criteria on a stack of Spotlight TerraSAR-X images. |

Shibin Parameswaran, Enming Luo, Charles-Alban Deledalle, Truong Nguyen NTIRE 2017, CVPR workshop, Honolulu, Hawaii, July 2017 (CVPR'17 Open Access, slides) We introduce a new external denoising algorithm that utilizes pre-learned transformations to accelerate filter calculations during runtime. The proposed fast external denoising (FED) algorithm shares characteristics of the powerful Targeted Image Denoising (TID) and Expected Patch Log-Likelihood (EPLL). By moving computationally demanding steps to an offline learning stage, the proposed approach aims to find a balance between processing speed and obtaining high quality denoising estimates. We evaluate FED on three datasets with targeted databases (text, face and license plates) and also on a set of generic images without a targeted database. We show that, like TID, the proposed approach is extremely effective when the transformations are learned using a targeted database. We also demonstrate that FED converges to competitive solutions faster than EPLL and is orders of magnitude faster than TID while providing comparable denoising performance. |

Charles-Alban Deledalle, Nicolas Papadakis, Joseph Salmon, Samuel Vaiter SPARS 2017, Lisbon, Portugal, June 2017 (ArXiv, HAL, poster) We focus on the maximum regularization parameter for anisotropic total-variation denoising. It corresponds to the minimum value of the regularization parameter above which the solution remains constant. While this value is well known for the Lasso, such a critical value has not been investigated in detail for the total variation. Yet it is important when tuning the regularization parameter, as it fixes an upper bound on the grid over which the optimal parameter is sought. We establish a closed-form expression for the one-dimensional case, as well as an upper bound for the two-dimensional case that appears reasonably tight in practice. This problem is directly linked to the computation of the pseudo-inverse of the divergence, which can be quickly obtained by performing convolutions in the Fourier domain. |
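
For the one-dimensional case, a closed form can be sketched from the optimality conditions (our own short derivation, offered as an illustration rather than a transcription of the paper). With D the forward-difference operator, the constant solution mean(y) minimizes 0.5*||y - x||^2 + lambda*||Dx||_1 if and only if some z with max|z_i| <= lambda satisfies D^T z = y - mean(y); in 1-D this z is unique and equals minus the running sum of y - mean(y). Hence lambda_max = max_k |sum_{i<=k} (y_i - mean(y))|. The sketch assumes unweighted forward differences and does not cover the paper's two-dimensional upper bound; the function name is hypothetical.

```python
def tv1d_lambda_max(y):
    """Smallest lambda for which 1-D TV denoising of y returns a constant signal.

    Solves argmin_x 0.5*||y - x||^2 + lambda * sum_i |x[i+1] - x[i]|; the
    answer is the largest absolute running sum of y - mean(y).
    """
    mean = sum(y) / len(y)
    running, best = 0.0, 0.0
    for v in y[:-1]:  # the full sum of y - mean(y) is 0, so it is skipped
        running += v - mean
        best = max(best, abs(running))
    return best


if __name__ == "__main__":
    # A unit step with two samples on each side becomes flat at lambda = 1.
    print(tv1d_lambda_max([0.0, 0.0, 1.0, 1.0]))
```

The step example agrees with the familiar behaviour of 1-D TV: a jump between two plateaus of lengths n1 and n2 shrinks by lambda*(1/n1 + 1/n2), so a unit jump with n1 = n2 = 2 vanishes at lambda = 1.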

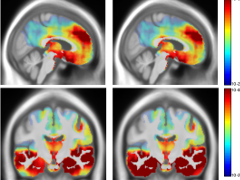

Pierrick Coupé, Charles-Alban Deledalle, Charles Dossal, Michèle Allard, and the Alzheimer's Disease Neuroimaging Initiative MICCAI, Workshop PatchMI, Athens, Greece, 2016 (Springer Link, HAL) The detection of brain alterations is crucial for understanding pathophysiological or neurodegenerative processes. Voxel-Based Morphometry (VBM) is one of the most popular methods to achieve this task. Based on the comparison of local averages of tissue densities, VBM has been used in a large number of studies. Despite its numerous advantages, VBM relies on a highly reduced representation of the local brain anatomy, since complex anatomical patterns are reduced to local averages of tissue probabilities. In this paper, we propose a new framework called Sparse-Based Morphometry (SBM) to better represent local brain anatomies. The presented patch-based approach uses dictionary learning to detect anatomical pattern modifications based on their shape and geometry. In our validation, the impact of the patch and group sizes is evaluated. Moreover, the sensitivity of SBM along Alzheimer's Disease (AD) progression is compared to that of VBM. Our results indicate that SBM is more sensitive than VBM on small groups and for detecting early anatomical modifications caused by AD. |

C. Sutour, C-A. Deledalle, and J-F. Aujol EUSIPCO, Budapest, Hungary, August 2016 (IEEE Xplore, HAL) Image denoising is a fundamental problem in image processing, and many powerful algorithms have been developed. However, they often rely on knowledge of the noise distribution and its parameters. We propose a fully blind denoising method that first estimates the noise level function and then uses this estimate for automatic denoising. First, we perform the nonparametric detection of homogeneous image regions in order to compute a scatterplot of the noise statistics; then we estimate the noise level function with the least absolute deviation estimator. The noise level function parameters are then directly re-injected into an adaptive denoising algorithm based on the non-local means, with no prior model fitting. The results demonstrate the performance of both the noise estimation and the denoising methods, yielding a robust blind denoising tool.

M. El Gheche, J-F. Aujol, Y. Berthoumieu, C.A. Deledalle and R. Fablet IEEE IVMSP, Bordeaux, France, July 2016 (IEEE Xplore, pdf) In this paper, we aim at synthesizing a texture from a high-resolution patch and a low-resolution image. To do so, we solve a nonconvex optimization problem that involves a statistical prior and a Fourier spectrum constraint. The numerical analysis shows that the proposed approach achieves better results (in terms of visual quality) than state-of-the-art methods tailored to super-resolution or texture synthesis.

Charles-Alban Deledalle, Loic Denis, Giampaolo Ferraioli, Florence Tupin IGARSS, Milan, Italy, July 2015 (IEEE Xplore, HAL) In this paper, we propose a new approach for height retrieval using multi-channel SAR interferometry. It combines patch-based estimation and total variation regularization to provide a regularized height estimate. The adaptation of the non-local likelihood term relies on the NL-SAR method, and the global optimization is performed through graph-cut minimization. The method is evaluated on both synthetic and real data.

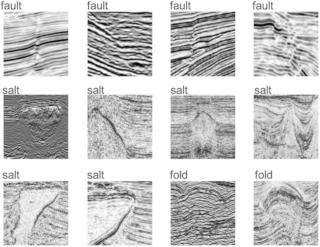

Sonia Tabti, Charles-Alban Deledalle, Loïc Denis, Florence Tupin IGARSS, Milan, Italy, July 2015 (IEEE Xplore, HAL) Due to their coherent nature, SAR (Synthetic Aperture Radar) images are very different from optical satellite images and more difficult to interpret, especially because of speckle noise. Given the increasing amount of available SAR data, efficient image processing techniques are needed to ease the analysis. Classifying this type of image, i.e., selecting an adequate label for each pixel, is a challenging task. This paper describes a supervised classification method based on local features derived from a Gaussian mixture model (GMM) of the distribution of patches. Initial classification results are encouraging and suggest that the GMM model has interesting potential for SAR imaging.

Charles-Alban Deledalle, Nicolas Papadakis, Joseph Salmon SPARS, Cambridge, England, July 2015 (HAL, poster) Restoration of a piecewise constant signal can be performed using anisotropic Total-Variation (TV) regularization. Anisotropic TV captures discontinuities well but suffers from a systematic loss of contrast. This contrast can be re-enhanced in a post-processing step known as least-squares refitting. We propose here to jointly estimate the refitting during the Douglas-Rachford iterations used to produce the original TV result. Numerical simulations show that our technique is more robust than the naive post-processing one.
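The naive post-processing step can be sketched for a 1D signal as follows: given a TV estimate, detect its jumps and replace each constant segment by the mean of the observations on that segment, which is the least-squares fit among signals sharing the same segments. This is a minimal Python illustration under our own assumptions (a precomputed TV estimate passed in, and a tolerance `tol` for detecting jumps); it is not the joint Douglas-Rachford refitting proposed in the paper, and `ls_refit_1d` is a hypothetical name.

```python
import numpy as np


def ls_refit_1d(y, x_tv, tol=1e-8):
    """Least-squares refitting of a 1D piecewise-constant estimate.

    Segments are maximal runs where consecutive values of x_tv differ by at
    most tol. Each segment is replaced by the mean of y over it, i.e. y is
    projected onto signals that are constant on the same segments as x_tv.
    """
    y = np.asarray(y, dtype=float)
    x_tv = np.asarray(x_tv, dtype=float)
    # Indices where a new segment starts (a jump in the TV estimate).
    starts = np.flatnonzero(np.abs(np.diff(x_tv)) > tol) + 1
    refit = np.empty_like(y)
    for segment in np.split(np.arange(y.size), starts):
        refit[segment] = y[segment].mean()
    return refit
```

For instance, with `y = [0.1, -0.1, 1.2, 0.8]`, anisotropic TV with $\lambda = 0.6$ returns `[0.3, 0.3, 0.7, 0.7]` (one can check this against the optimality conditions), a jump of 0.4 instead of 1. Refitting restores the levels to approximately `[0, 0, 1, 1]` while keeping the jump location found by TV.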

Charles-Alban Deledalle, Nicolas Papadakis, Joseph Salmon Scale Space and Variational Methods in Computer Vision 2015, May 2015, Lège Cap Ferret, France (Springer Link, ArXiv) Bias in image restoration algorithms can hamper further analysis, typically when the intensities have a physical meaning of interest, e.g., in medical imaging. We propose to suppress one part of the bias (the method bias) while leaving unchanged the other, unavoidable part (the model bias). Our debiasing technique can be used for any locally affine estimator, including l1 regularization, anisotropic total variation and some nonlocal filters.

Denis H. P. Salvadeo, Florence Tupin, Isabelle Bloch, Nelson D. A. Mascarenhas, Alexandre L. M. Levada, Charles-Alban Deledalle, Sonia Dahdouh In the proceedings of ICIP, Paris, France, October 2014 (IEEE Xplore) In this paper, we propose an extension of the framework of Deledalle et al. [1] for the Non-Local Means (NLM) method. This extension is a general adaptive method to denoise images containing multiple types of noise. It relies on a segmentation stage that indicates the noise type at each pixel, in order to select the similarity measure and the parameters suited to denoising each patch of the image. In particular, it has been experimentally observed that fetal 3D ultrasound images are corrupted by different types of noise depending on the tissue. Finally, the proposed method is applied to denoise these images, with very good results.

Moncef Hidane, Jean-François Aujol, Yannick Berthoumieu, Charles-Alban Deledalle In the proceedings of ICIP, Paris, France, October 2014 (IEEE Xplore, HAL) The classical super-resolution (SR) setting starts with a set of low-resolution (LR) images related by subpixel shifts and tries to reconstruct a single high-resolution (HR) image. In some cases, partial observations of the HR image are also available. Trying to complete the missing HR data without any reference to the LR data is an inpainting (or completion) problem. In this paper, we consider the problem of recovering a single HR image from a pair consisting of a complete LR image and an incomplete HR image. This setting arises in particular when one wants to fuse image data captured at two different resolutions. We propose an efficient algorithm that takes advantage of both images by first learning nonlocal interactions from an interpolated version of the LR image using patches. These interactions are then used in a convex energy whose minimization yields a complete super-resolved image.

Sonia Tabti, Charles-Alban Deledalle, Loïc Denis, Florence Tupin In the proceedings of ICIP, Paris, France, October 2014 (IEEE Xplore, HAL) Patches have proven to be very effective features to model natural images and to design image restoration methods. Given the huge diversity of patches found in images, modeling the distribution of patches is a difficult task. Rather than attempting to accurately model all patches of the image, we advocate that it is sufficient that every pixel of the image belong to at least one well-explained patch. An image is thus described as a tiling of patches that have large prior probability. In contrast to most patch-based approaches, we do not process the image in patch space, and instead require that patches match well wherever they overlap. In order to apply this modeling to the restoration of SAR images, we define a suitable data-fitting term to account for the statistical distribution of speckle. Restoration results are competitive with state-of-the-art SAR despeckling methods.

Camille Sutour, Jean-François Aujol, Charles-Alban Deledalle, Jean-Philippe Domenger In the proceedings of ICIP, Paris, France, October 2014 (IEEE Xplore, HAL) We derive a denoising method based on an adaptive regularization of the non-local means. The NL-means reduce noise by exploiting the redundancy in natural images: they compute a weighted average of pixels whose neighborhoods are similar. This method performs well, but it suffers from residual noise around singular structures. We use the weights computed in the NL-means as a measure of the performance of the denoising process. These weights balance the data-fidelity term in an adapted ROF model, in order to locally perform adaptive TV regularization. Moreover, this model can be adapted to different noise statistics, and a fast solution can be computed in the general case of the exponential family. We adapt this model to video denoising by using spatio-temporal patches. Compared to spatial patches, they offer better temporal stability, while the adaptive TV regularization corrects the residual noise observed around moving structures.

Xin Su, Charles-Alban Deledalle, Florence Tupin, Hong Sun In the proceedings of IGARSS, Québec, Canada, July 2014 (IEEE Xplore) This paper presents a change detection and classification method for multi-temporal Synthetic Aperture Radar (SAR) images. The change criterion, based on a generalized likelihood ratio test, is an extension of the likelihood ratio test in which both the noisy data and the multi-temporal denoised data are used. The changes are detected by thresholding and then classified into step, impulse and cycle changes according to their temporal behavior. The results demonstrate the effectiveness of the proposed method.

Sonia Tabti, Charles-Alban Deledalle, Loïc Denis, Florence Tupin In the proceedings of IGARSS, Québec, Canada, July 2014 (IEEE Xplore, HAL) Adding invariance properties to a dictionary-based model is a convenient way to reach a high representation capacity while maintaining a compact structure. Compact dictionaries of patches are desirable because they ease semantic interpretation of their elements (atoms) and offer robust decompositions even under strong speckle fluctuations. This paper describes how patches of a dictionary can be matched to a speckled image by accounting for unknown shifts and affine radiometric changes. This procedure is used to build dictionaries of patches specific to SAR images. The dictionaries can then be used for denoising or classification purposes. |

Charles-Alban Deledalle, Loïc Denis, Florence Tupin EUSIPCO, Marrakech, Morocco, September 2013 (IEEE Xplore, HAL, poster) Matching patches from a noisy image to atoms in a dictionary of patches is a key ingredient of many techniques in image processing and computer vision. By representing with a single atom all patches that are identical up to a radiometric transformation, the dictionary size can be kept small, thereby retaining good computational efficiency. Identifying the atom that best matches a given noisy patch then requires a contrast-invariant criterion. In the light of detection theory, we propose a new criterion that ensures contrast invariance and robustness to noise. We discuss its theoretical grounding and assess its performance under Gaussian, gamma and Poisson noise.

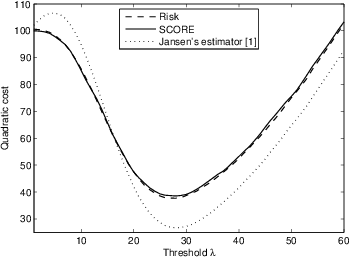

Jalal Fadili, Gabriel Peyré, Samuel Vaiter, Charles Deledalle, Joseph Salmon SPARS, Lausanne, Switzerland, July 2013 (HAL) In this paper, we investigate in a unified way the structural properties of solutions to inverse problems regularized by the generic class of semi-norms defined as a decomposable norm composed with a linear operator, the so-called analysis decomposable prior. This encompasses several well-known analysis-type regularizations such as the discrete total variation, the analysis group-Lasso or the nuclear norm. Our main results establish sufficient conditions under which uniqueness of the regularized solution and its stability to bounded noise are guaranteed.

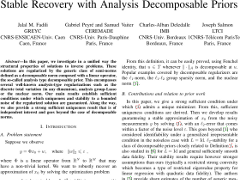

Charles-Alban Deledalle, Gabriel Peyré, Jalal Fadili SPARS, Lausanne, Switzerland, July 2013 (HAL, poster) In this work, we construct a risk estimator for hard thresholding which can be used as a basis to solve the difficult task of automatically selecting the threshold. As hard thresholding is not even continuous, Stein's lemma cannot be used to get an unbiased estimator of degrees of freedom, hence of the risk. We prove that under a mild condition, our estimator of the degrees of freedom, although biased, is consistent. Numerical evidence shows that our estimator outperforms another biased risk estimator. |

Samuel Vaiter, Gabriel Peyré, Jalal Fadili, Charles-Alban Deledalle, Charles Dossal SPARS, Lausanne, Switzerland, July 2013 (ArXiv) In this paper, we are concerned with regression problems where covariates can be grouped in nonoverlapping blocks, and where only a few of them are assumed to be active. In such a situation, the group Lasso is an attractive method for variable selection since it promotes sparsity of the groups. We study the sensitivity of any group Lasso solution to the observations and provide its precise local parameterization. When the noise is Gaussian, this allows us to derive an unbiased estimator of the degrees of freedom of the group Lasso. This result holds for any fixed design, whether it is under- or overdetermined. With these results at hand, various model selection criteria, such as the Stein Unbiased Risk Estimator (SURE), are readily available, providing an objectively guided choice of the optimal group Lasso fit.

Jalal Fadili, Gabriel Peyré, Samuel Vaiter, Charles Deledalle, Joseph Salmon SampTA, Bremen, Germany, July 2013 (ArXiv) In this paper, we investigate in a unified way the structural properties of solutions to inverse problems. These solutions are regularized by the generic class of semi-norms defined as a decomposable norm composed with a linear operator, the so-called analysis-type decomposable prior. This encompasses several well-known analysis-type regularizations such as the discrete total variation (in any dimension), the analysis group-Lasso or the nuclear norm. Our main results establish sufficient conditions under which uniqueness of the regularized solution and its stability to bounded noise are guaranteed. Along the way, we also provide a strong sufficient uniqueness result that is of independent interest and goes beyond the case of decomposable norms.

Xin Su, Charles-Alban Deledalle, Florence Tupin, Hong Sun IGARSS, Melbourne, Australia, July 2013 (IEEE Xplore, pdf) This paper presents a change detection method between two Synthetic Aperture Radar (SAR) images with similar incidence angles, using a likelihood ratio test (LRT). To address the composite hypothesis problem of the LRT, we propose to replace the noise-free values by their estimates. To this end, the multi-temporal non-local means denoising method proposed in [1] is used to estimate the noise-free values from both spatial and temporal information. The change detection results show the effectiveness of the proposed method compared with state-of-the-art methods such as the log-ratio operator and the generalized likelihood ratio test.

Charles-Alban Deledalle, Samuel Vaiter, Gabriel Peyré, Jalal Fadili, Charles Dossal In the proceedings of ICIP, Orlando, Florida, Sept.-Oct. 2012 (IEEE Xplore, HAL, poster) In this paper, we propose a rigorous derivation of the expression of the projected Generalized Stein Unbiased Risk Estimator (GSURE) for the estimation of the (projected) risk associated with regularized ill-posed linear inverse problems using a sparsity-promoting L1 penalty. The projected GSURE is an unbiased estimator of the recovery risk on the vector projected onto the orthogonal complement of the kernel of the degradation operator. Our framework can handle many well-known regularizations, including sparse synthesis-type (e.g., wavelet) and analysis-type (e.g., total variation) priors. A distinctive novelty of this work is that, unlike previously proposed L1 risk estimators, we have a closed-form expression that can be implemented efficiently once the solution of the inverse problem is computed. To support our claims, numerical examples on ill-posed inverse problems with analysis and synthesis regularizations are reported, where our GSURE estimates are used to tune the regularization parameter.